Facial Recognition

Every police force in the country is using dangerous facial recognition technology that allows them to ID and track any one of us. We urgently need safeguards to protect us from it as we go about our daily lives.

What’s happening?

Police forces in the UK have used facial recognition in one form or another since 2015. And despite Liberty client Ed Bridges winning the world’s first legal challenge to police use of the technology in 2020, every force in the country is now using it.

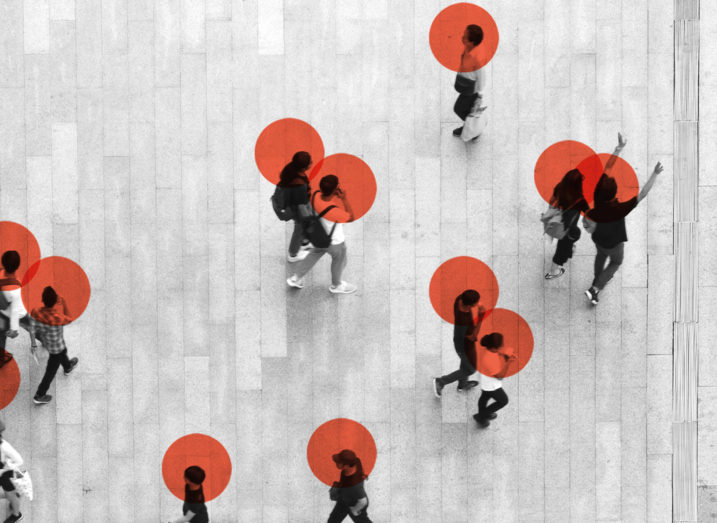

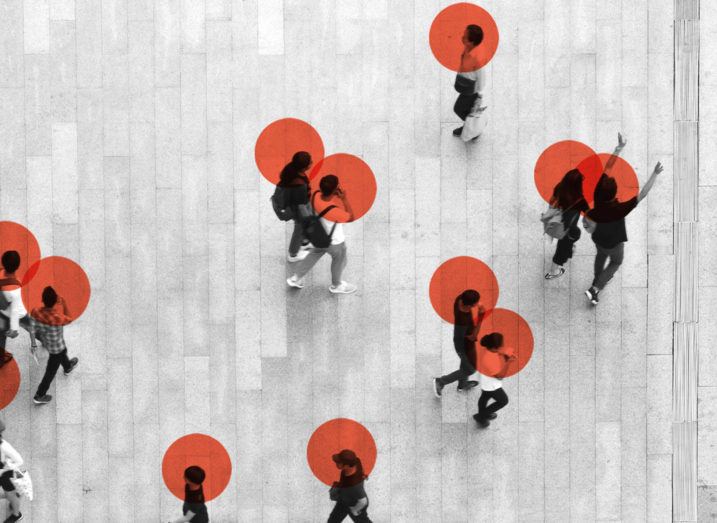

Some forces are using what’s called ‘live facial recognition’. This usually involves parking vans equipped with the tech on busy shopping streets or outside stadiums and train stations. Cameras on the vans’ roofs scan the faces of the hundreds of thousands of us who come within range and attempt to match them in real time to images on secretive watchlists.

In July 2025, the Met announced it would double its number of weekly live facial recognition deployments. And in August, the Government announced a new fleet of 10 facial recognition vans to expand use of the technology across the country. The vans have since been deployed in Bedford and Manchester.

But some forces – including South Wales Police, the force which lost the Bridges case – are going even further. South Wales Police has tested setting up networks of facial recognition cameras across Cardiff for specific events like the Six Nations rugby. This massively expands the area covered by facial recognition when the tech is in use, and means thousands more people are scanned.

Hammersmith and Fulham has approved plans for live facial recognition cameras across ten locations in the London borough. And the UK’s first permanent facial recognition cameras went live in Croydon in South London in October 2025.

South Wales Police has also been trialling facial recognition technology on officers’ phones, enabling them to scan, and potentially instantly identify, anyone they come into contact with. This is known as ‘operator-initiated facial recognition’ and is now expected to spread to more police forces.

Other forces use software that lets them identify everyone in any pictures and footage they can get their hands on, from CCTV cameras to what we post on our social media accounts. This is called ‘retrospective facial recognition’, because it is applied to images and videos that already exist, rather than to faces scanned by cameras in real time as with ‘live facial recognition’. Hammersmith and Fulham has approved plans for 500 CCTV cameras to be equipped with retrospective facial recognition capabilities, with all footage stored so that it can be searched for every location where a given face appears.

After years of police using facial recognition without proper laws or safeguards, the Home Office has finally launched a public consultation – giving us all the opportunity to have our say. The Government must halt the rapid rollout of facial recognition technology and make sure there are safeguards in place to protect each of us and prioritise our rights. If you would like to hear more about how to take part in the consultation, let us know by signing up here.

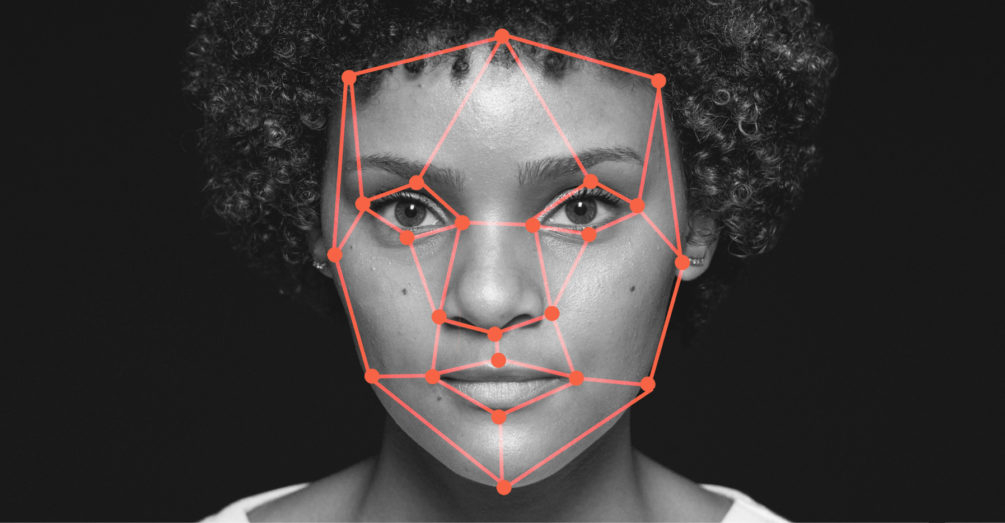

How does facial recognition work?

Facial recognition works by matching faces to images of people on a watchlist. These are either the faces of people within range of special cameras (in the case of ‘live’ and ‘operator-initiated’ facial recognition) or the faces of people who appear in pictures and video footage (in the case of ‘retrospective’ facial recognition).

It does this by ‘mapping’ the distinct points of our faces and allocating a numbered code to that map. It then compares that code to the codes assigned to faces on the watchlist.
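To make the idea of a ‘numbered code’ more concrete, here is a minimal sketch in Python. It assumes each face has already been turned into a short list of numbers, and that a ‘match’ simply means two codes are closer together than a chosen threshold. The function name, the three-number codes, the watchlist entries and the threshold are all hypothetical; real police systems use proprietary software and much longer codes, and their exact settings are not public.

```python
import math

# Hypothetical face codes. Real systems produce much longer codes;
# these three-number lists are made up purely for illustration.
watchlist: dict[str, list[float]] = {
    "entry_a": [0.1, 0.9, 0.3],
    "entry_b": [0.8, 0.2, 0.5],
}


def check_against_watchlist(
    face: list[float], watchlist: dict[str, list[float]], threshold: float
) -> list[str]:
    """Return every watchlist entry whose code lies within `threshold` of `face`.

    Similarity is measured as the straight-line (Euclidean) distance between
    codes: the smaller the distance, the more alike the two faces are judged.
    """
    return [
        name
        for name, code in watchlist.items()
        if math.dist(face, code) <= threshold
    ]


# The code for a passer-by scanned by a camera (again, made up).
passer_by = [0.12, 0.88, 0.31]

print(check_against_watchlist(passer_by, watchlist, threshold=0.1))
```

The threshold is a choice made by whoever runs the system: the looser it is set, the more people get flagged as ‘matches’, including people who are not the person on the watchlist at all.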

In Ed Bridges’ case against South Wales Police, documents given to the court showed that the watchlists can contain pictures of anyone, including people who are not suspected of any wrongdoing, and the images used can come from anywhere, even from our social media accounts.

Why should we be concerned?

In Ed Bridges’ case, the Court of Appeal found that South Wales Police’s use of live facial recognition violated privacy rights and broke data protection and equality laws. This was partly due to the level of discretion officers had when choosing where to use the tech.

But despite Ed’s victory, the Government and police have forged ahead, infecting every aspect of our lives with facial recognition that can be used to ID and track any one of us.

It’s been used at the football, the rugby and Formula 1, at music festivals, and even at the seaside. It’s been used as a tool of intimidation at protests. And it’s being used in shops, with information passed to the police to track down people forced into shoplifting by the cost-of-living crisis.

And there is simply no telling how and when police are using retrospective facial recognition to identify countless people in any image or footage they hold.

Liberty Investigates and i news discovered that Metropolitan Police computers in London had accessed a search engine tool called PimEyes thousands of times. PimEyes allows users to upload a photo of someone and find where images of that person appear elsewhere on the internet.

Liberty Investigates and the Telegraph also revealed that police forces have run hundreds of facial recognition searches against the passport database, which contains the images of 46 million people. They also found that police forces are using facial recognition to track children as young as 12.

Facial recognition changes what it means to simply live our lives.

We want to be able to see our favourite music artists on tour, go to the football, take road trips, and make memories with our loved ones safely without being monitored.

But the police and the Government want the ability to watch anyone they choose, wherever we go.

After years of high-profile scandals involving violent, racist and sexist police forces in the UK, trust in officers is at an all-time low. Facial recognition that can ID and track down all of us is dangerous tech – and it’s being used without any safeguards.

And history tells us that surveillance tech will always be disproportionately used against communities of colour. Facial recognition is known to misidentify Black people more often, meaning that if you are Black you are more likely to be wrongly stopped, questioned and searched by police. The Metropolitan Police has often used it in ethnically diverse areas and at events likely to be attended by large numbers of people of colour, including Notting Hill Carnival.

What are we calling for?

There are simply no rules or laws governing police use of facial recognition in the UK – it’s a regulatory Wild West.

Other countries are putting laws in place to limit the dangerous effects of this technology. We need to see urgent action from the Government to introduce safeguards to limit how the police can use facial recognition, and to protect all of us from abuse of power as we go about our daily lives.

The Government’s public consultation is our chance to hammer home the case for laws to ensure:

- live facial recognition is not used without independent sign-off

- police can only use live facial recognition to:

  - search for missing persons or victims of abduction, human trafficking and sexual exploitation

  - prevent an imminent threat to life or people’s safety

  - search for people suspected of committing a serious criminal offence

- facial recognition watchlists only contain images strictly relevant to the purposes above

- police give the public at least 14 days’ advance warning of live facial recognition deployments
