Facial Recognition

Facial recognition technology is being used by police and private companies in publicly accessible places. It breaches everyone’s human rights, discriminates against people of colour and is unlawful. It’s time to ban it.

What’s happening?

Several police forces have used live facial recognition surveillance technology in public spaces since 2015, scanning millions of people’s faces.

In 2020, Liberty client Ed Bridges won the world’s first legal challenge to police use of the tech. The Court ruled that South Wales Police’s use of intrusive and discriminatory facial recognition violated privacy rights and broke data protection and equality laws.

But despite the ruling, several police forces have reaffirmed their commitment to the tech and are looking for ways around our court win. Private companies are also using it in publicly accessible places like shopping centres and train stations.

What is facial recognition?

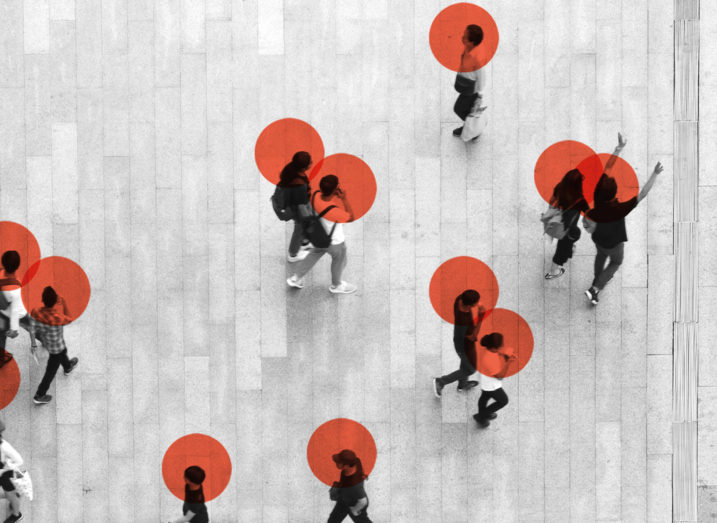

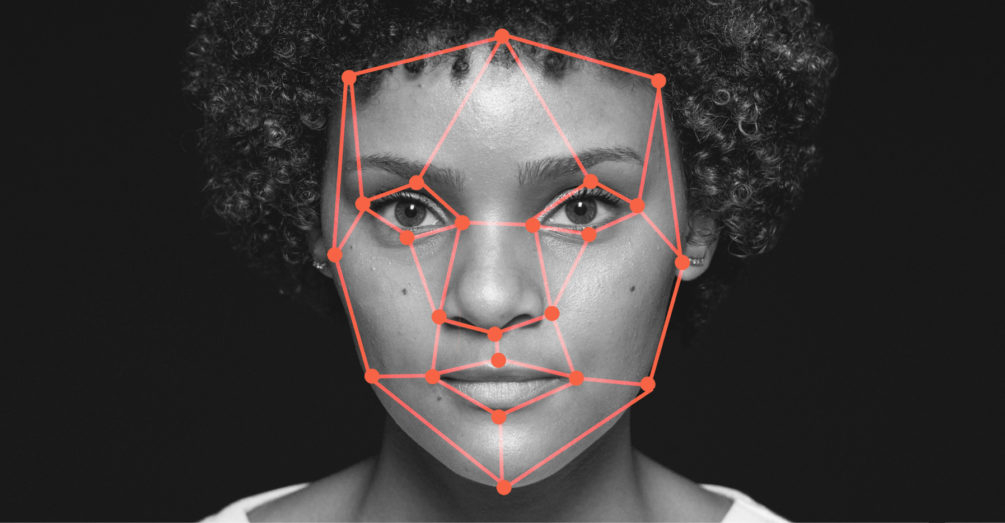

Facial recognition works by matching the faces of people walking past special cameras to images of people on a watch list. It does this by scanning the distinct points of our faces and creating biometric maps – more like fingerprints than photographs.

Everyone in range is scanned and has their biometric data (their unique facial measurements) snatched without their consent.

The watch lists can contain pictures of anyone, including people who are not suspected of any wrongdoing, and the images can come from anywhere – even from our social media accounts.

South Wales Police and the Metropolitan Police have been using live facial recognition in public for years with no public or parliamentary debate.

They’ve used it on tens of thousands of us, everywhere from protests and football matches to music festivals, and even just busy streets.

Some private companies have also used the tech in publicly accessible places, including King’s Cross in London and the Trafford Centre in Manchester.

The police are supposed to protect us and make us feel safe – but I think the technology is intimidating and intrusive.

Why should we be concerned?

Our court victory against South Wales Police shows that the lack of safeguards over whose image is included on a watch list violates everyone’s right to privacy.

The Court also said the level of discretion officers have when choosing where to use the tech contributes to a breach of privacy rights.

But the Metropolitan Police has confirmed its intention to continue using the tech. It has done so despite admitting that it deploys facial recognition in busy areas so that it can scan as many people as possible, and that it previously used the tech to track people experiencing mental health crises who were not wanted by the police.

Other police forces will follow the Met if facial recognition use is not stopped for good.

And history tells us that surveillance tech will always be disproportionately used against communities of colour. Facial recognition is known to misidentify Black people, meaning that if you are Black you are more likely to be stopped, questioned and searched by police. Yet the Metropolitan Police has often used it in ethnically diverse areas and at events likely to be attended by many people of colour, including Notting Hill Carnival for two years running.

What are we calling for?

Creating law to govern police and private company use of facial recognition technology will not solve the human rights concerns or the tech’s in-built discrimination.

And making the tech more accurate, which would mean we could all be identified and tracked in real time, can never be viewed as the solution, especially as police will use it disproportionately against communities of colour.

The only solution is to ban it.
